Another brick in brain decoding.

Sylvain was interviewed by Science magazine about a new study, published in PLoS Biology, that reports decoding music from the brain's electrophysiological activity in participants listening to a Pink Floyd song (Another Brick in the Wall, Part 1).

Here are the comments Sylvain shared with the magazine, in no particular order, and here is the link to the news piece.

[A French-language report on the same topic, featuring another interview with Sylvain, is available for replay from the Radio-Canada program Les Années Lumières, broadcast on August 20, 2023.]

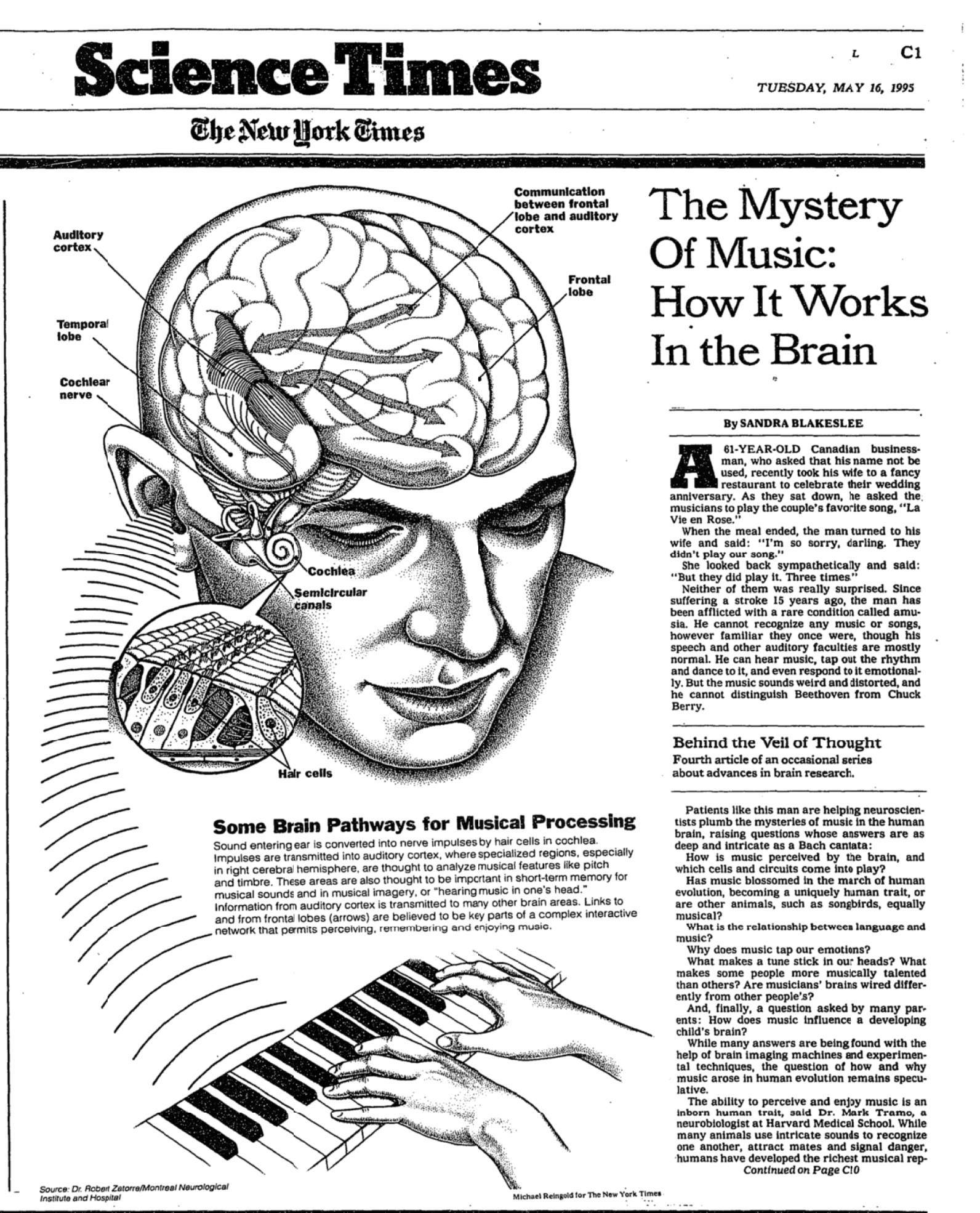

The data were collected from participants implanted with intracranial electrodes: patient volunteers with epilepsy who had been implanted for clinical purposes, to localize the onset of their seizures. In that respect, the study follows previous work that also used invasive recordings in humans for brain decoding. Other decoding studies have used noninvasive neuroimaging techniques instead; yet some aspects of the data quality of invasive recordings remain unmatched. The New York Times explains in a bit more detail which data were used and why.

Over the past decade, machine learning techniques for data classification have permeated several areas of neuroscience and neuroimaging research. This study follows in the footsteps of a growing body of reports showing that it is possible, to some extent, to decode elements of information conveyed by human brain circuits. In other words, with enough data to train "brain decoders" (pieces of program code whose parameters are learned from large amounts of training data; here, most of the song played to the participants, except for 15 seconds held out to test how well the decoders could reconstruct music segments from brain activity), it is possible to identify what type of movie scene a person is watching, what words they are reading, what movements they intend to make, etc.
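To make the training/testing logic concrete, here is a minimal sketch of a decoder with a held-out 15-second segment. It assumes a generic linear (ridge regression) decoder mapping neural features to a spectrogram-like representation of the music, on synthetic data; this is not necessarily the authors' model, and all names, sizes and the sampling rate are hypothetical. The test segment is one contiguous block because neighboring time samples are strongly correlated, so scattering test samples across the song would leak information into training.

```python
import numpy as np


def split_holdout(n_samples, test_start, test_len):
    """Hold out one contiguous block of samples for testing; train on the rest."""
    test_idx = np.arange(test_start, test_start + test_len)
    train_idx = np.setdiff1d(np.arange(n_samples), test_idx)
    return train_idx, test_idx


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    A = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ Y)


# Hypothetical synthetic data standing in for real recordings.
rng = np.random.default_rng(0)
fs = 100                                  # feature samples per second (assumed)
n_samples = 190 * fs                      # a few minutes of "song" (assumed length)
n_electrodes, n_freq_bins = 64, 32        # assumed sizes
X = rng.standard_normal((n_samples, n_electrodes))          # neural features
W_true = rng.standard_normal((n_electrodes, n_freq_bins))
Y = X @ W_true + 0.5 * rng.standard_normal((n_samples, n_freq_bins))  # toy spectrogram

# Keep 15 s of the song out of training.
train_idx, test_idx = split_holdout(n_samples, test_start=60 * fs, test_len=15 * fs)
W = fit_ridge(X[train_idx], Y[train_idx], alpha=10.0)
Y_hat = X[test_idx] @ W                   # decoded spectrogram for the held-out 15 s
```

Real decoders typically also use time-lagged copies of the neural features, since brain responses trail the sound; that is left out here for brevity.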

Recent studies have shown that it is also possible to decode some of the speech content a person is listening to. The present study explores how such approaches may also identify musical elements of a tune played to the participants. Like other studies in that realm, it may be perceived as an experimental "mind reading" stunt: a spectacular feat of brain data analysis that does not significantly advance our understanding of how the brain processes complex stimuli such as music. That said, I find that the present study goes the extra mile to make its decoders neuroscientifically interpretable, to some extent.

For instance, the authors identified which musical features (e.g., harmony, sung portions, rhythmic patterns and transients) were best captured by the brain decoders. One cool way of testing this is to use the generative ability of the decoders, which are trained to associate patterns of neural activity with musical features. You can reverse the procedure and generate bits of music from segments of brain activity that were not used for training. You can then quantitatively compare the sound quality, musical content and other aural features with the actual musical segments played to the participants. The authors show that the music reconstructed from brain activity is fairly well correlated with the original musical bits (a correlation of about 0.3), albeit limited: it only replicates about 20% of the signal content of the original musical segment [measured as variance explained].

I applaud that the authors shared their data and methods openly, so that other teams can replicate and build on their results. However, when I consulted the data repository, the sound clips reconstructed by the decoders from brain activity were not available for download, although they are cited as available supplementary material. The Science piece does feature excerpts of the original and reconstructed music. Of course, in pure audiophile terms, the two remain perceptually quite far apart, but the mood of the tune and some aural features are fairly well reconstituted; note that the actual lyrics are not decoded, only part of the melodic line of the singing.
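The two reconstruction scores mentioned above, correlation and variance explained, are not interchangeable. As a hedged illustration (the helper names are hypothetical, and pooling all time points and frequency bins into one score is an assumption, not necessarily how the study computed it), correlation ignores differences in scale and offset, whereas variance explained penalizes them:

```python
import numpy as np


def pearson_r(y_true, y_pred):
    """Pearson correlation between two signals, flattened to 1-D."""
    return float(np.corrcoef(y_true.ravel(), y_pred.ravel())[0, 1])


def variance_explained(y_true, y_pred):
    """Coefficient of determination: R^2 = 1 - SS_res / SS_tot (pooled over all values)."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


# A reconstruction with the right shape but half the amplitude:
original = np.array([1.0, 2.0, 3.0, 4.0])
reconstruction = 0.5 * original
print(pearson_r(original, reconstruction))           # ~1.0: perfectly correlated
print(variance_explained(original, reconstruction))  # -0.5: worse than predicting the mean
```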

The authors also show that when certain brain regions are removed from the decoders' inputs, the reconstructed music is degraded to various degrees, depending on where these virtual ablations were applied and how large they were. This kind of manipulation also helps interpret the observed effects. In that respect, their observations confirm previous reports in the extensive literature on the neuroscience of musical perception and cognition, based essentially on noninvasive neuroimaging data.
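A virtual ablation can be sketched as follows: drop a subset of electrodes, refit the decoder, and compare its test score with that of the full model. This is a minimal illustration on synthetic data, assuming a ridge decoder and a hypothetical set of "music-responsive" electrodes (indices 0 to 9); it is not the authors' exact procedure.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression weights."""
    A = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ Y)


def r2(Y, Y_hat):
    """Variance explained, pooled over all outputs."""
    return 1.0 - np.sum((Y - Y_hat) ** 2) / np.sum((Y - Y.mean()) ** 2)


def ablated_r2(X_tr, Y_tr, X_te, Y_te, drop, alpha=1.0):
    """Remove the electrodes listed in `drop`, refit, and score on the test data."""
    keep = np.ones(X_tr.shape[1], dtype=bool)
    keep[list(drop)] = False
    W = fit_ridge(X_tr[:, keep], Y_tr, alpha)
    return r2(Y_te, X_te[:, keep] @ W)


rng = np.random.default_rng(1)
n, n_el, n_out = 2000, 40, 8
X = rng.standard_normal((n, n_el))
W_true = np.zeros((n_el, n_out))
W_true[:10] = rng.standard_normal((10, n_out))  # hypothetical: only electrodes 0-9 carry music info
Y = X @ W_true + 0.5 * rng.standard_normal((n, n_out))
X_tr, X_te, Y_tr, Y_te = X[:1500], X[1500:], Y[:1500], Y[1500:]

full = ablated_r2(X_tr, Y_tr, X_te, Y_te, drop=[])
no_music_sites = ablated_r2(X_tr, Y_tr, X_te, Y_te, drop=range(10))      # ablate informative sites
no_other_sites = ablated_r2(X_tr, Y_tr, X_te, Y_te, drop=range(10, 20))  # ablate uninformative sites
print(full, no_music_sites, no_other_sites)
```

Ablating the informative electrodes collapses the score, while ablating the others barely changes it; the size and location of the ablated set is what makes the comparison informative.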

Of course, one catchy aspect of the study is that they used a Pink Floyd song for the experiment. I was curious which song it was, and when they described Another Brick in the Wall, Part 1 as a classic, popular rock song, I at first thought this was a typo. Indeed, the true classic rock anthem is Another Brick in the Wall, Part 2, which was immensely popular as a single (4 million copies sold; it topped the charts in 14 countries, including the US and UK).

But they did use Part 1, a deeper cut from the original album: a smoother version of the tune, with no drums, few lyrics and a more atmospheric structure. In other words, the tune's complexity is relatively mild: it lies more in the layering of evocative components than in the busier, traditional instrumentation and structure (heavy rhythm, choruses, bridges) of a typical rock-pop song such as Part 2. The relative simplicity of the tune may have helped decoding performance.

Finally, music appreciation is a very subjective experience. The study did not report how much participants liked or disliked the tune, or even how familiar they were with it, which may have affected decoding performance. The age range was pretty broad, from 16 to 60, with an average around 30 years old, which means that most participants were not born when the tune was released. Some may have heard it for the very first time (again, it is not a megahit that half the planet has heard). Does it matter? Certainly, because familiarity shapes our perception of the world.

In that regard, I was surprised that the decoders designed individually for each participant did not perform better than the global decoder trained across all participants. This may be explained by the fact that electrode implantation is, of course, driven by each patient's clinical needs, not by the study's. This means that any given patient may have, at best, only a few electrodes implanted in music-relevant regions: not enough to design an efficient musical decoder for that particular person. Yet, when the data from the 29 patients were pooled to design a single musical decoder for everyone, there were enough common features across the brain activity of all these people, younger and older, Pink Floyd fans and newcomers, to read out *some* musical patterns from everyone's brain.

Could this be related to the popularity of the piece, or to its de novo appreciation? We don't know, as this was not tested, but I think it opens interesting questions.

In a similar vein, would the decoder trained on Another Brick in the Wall, Part 1 work as well for Another Brick in the Wall, Part 2, another Pink Floyd song, or a Mozart piece? Probably not. Similarly, would the brain decoder trained with this group of participants perform reasonably well on brain activity from other patients? I would not bet my LP of The Wall on that (they could have tested it by splitting the group of patients in two: one half for training, the other for testing).
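The patient-level split suggested above differs from the usual split in that whole patients, not time samples, are assigned to either training or testing, so the test set only contains brains the decoder has never seen. A minimal sketch, assuming each sample is tagged with a hypothetical patient ID and that neural features have already been mapped to a space shared across patients (a strong assumption here, since implantation sites differ from patient to patient). scikit-learn's `GroupShuffleSplit` and `GroupKFold` implement the same idea.

```python
import numpy as np


def split_patients(patient_ids, test_fraction=0.5, seed=0):
    """Assign whole patients (not individual samples) to disjoint train/test groups."""
    rng = np.random.default_rng(seed)
    unique_ids = np.unique(patient_ids)
    shuffled = rng.permutation(unique_ids)
    n_test = max(1, int(len(unique_ids) * test_fraction))
    test_mask = np.isin(patient_ids, shuffled[:n_test])
    return ~test_mask, test_mask  # boolean masks over samples


# Hypothetical example: 29 patients, 100 samples each.
patient_ids = np.repeat(np.arange(29), 100)
train_mask, test_mask = split_patients(patient_ids)
print(np.unique(patient_ids[train_mask]).size, np.unique(patient_ids[test_mask]).size)  # 15 14
```

A decoder fit on `train_mask` samples and scored on `test_mask` samples would then measure generalization to new people rather than to new moments of the same recordings.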

More broadly, this raises questions about the translational significance and generalizability of the machine learning approach, which is agnostic to the actual neurophysiological mechanisms at play. I would argue that these methods expand the neuroscientist's toolkit in exciting ways, but they do not fully elucidate the remaining hard questions of how we perceive, enjoy and produce complex patterns such as music, speech and social interactions. More mechanistic, neuro-inspired machine learning architectures may be the way to go, and they preoccupy many of us in contemporary neuroscience research.

Congrats to the authors on this excellent study!

From the New York Times, May 16, 1995: note the reference to our dear collaborator and musical cognition specialist, Prof. Robert Zatorre.